Top 10 Technology Trends In 2026 By Gartner: Strategic Insights For IT Outsourcing Leaders

Each year, Gartner consolidates insights from its IT Symposium/Xpo, global CIO surveys, and proprietary research to identify the technology trends in 2026 that will reshape enterprise strategy over the next five years. These technology trends signal where organizations will invest to drive efficiency, resilience, and competitive differentiation, spanning AI-native platforms, next-generation computing infrastructure, advanced cybersecurity frameworks, and sovereign data strategies.

In this blog, we analyze the top 10 technology trends in 2026 highlighted by Gartner, breaking down what each trend entails, why it is gaining momentum, real-world implications, and what it means specifically for IT leaders.

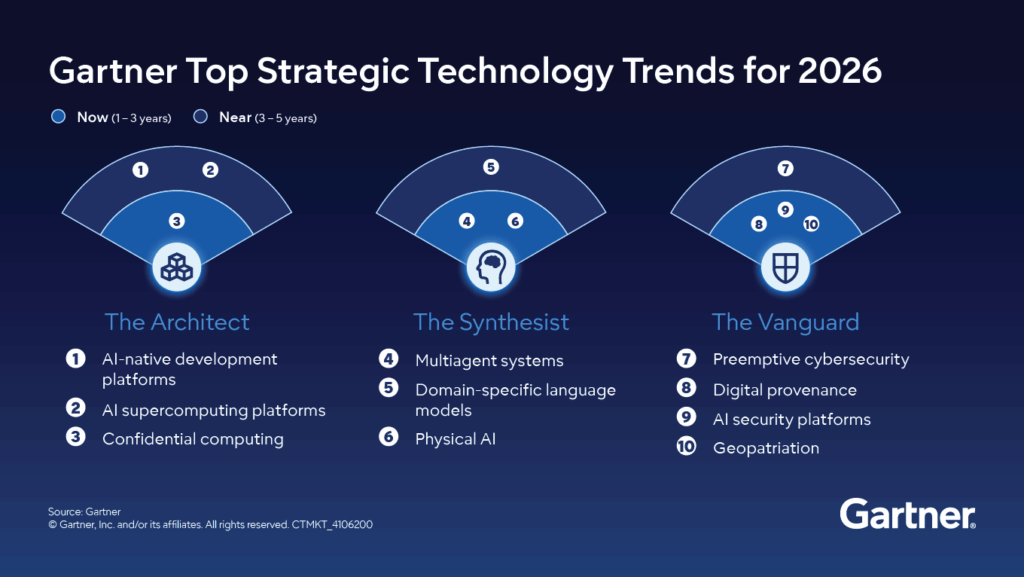

What are the 2026 Gartner Technology Trends?

Gartner’s strategic framework on technology trends is widely regarded as a benchmark for enterprise IT planning, influencing board-level investment decisions and long-term digital roadmaps across global markets. The technology trends identified for 2026 reflect a broader recalibration of enterprise priorities. They outline how enterprises must redesign digital foundations, institutionalize AI governance, and integrate advanced computing models to remain competitive in an increasingly complex and AI-driven global landscape.

The Architect: Building the Next-Generation Digital Foundation

The first theme, The Architect, focuses on the foundational platforms, infrastructure models, and computational capabilities that will underpin enterprise systems in the coming decade. This includes AI-native development environments, advanced computing architectures, and secure processing frameworks.

The core idea behind this category of technology trends is that traditional IT stacks are no longer sufficient to support AI-scale workloads, real-time analytics, and hyper-automation. Enterprises must rethink how applications are built, how computing power is distributed, and how sensitive data is processed.

The Synthesist: Combining Intelligence to Create New Business Value

The second theme, The Synthesist, centers on systems that generate new forms of business value by orchestrating multiple technologies simultaneously. Rather than deploying AI, automation, or analytics in silos, organizations are increasingly combining them into coordinated, intelligent ecosystems.

These technology trends emphasize collaboration between AI agents, domain-specialized models, and embedded intelligence across digital and physical environments. The result is operational systems that are autonomous, adaptive, and capable of continuous optimization.

The Vanguard: Trust, Risk, and Geopolitical Readiness

The third theme, The Vanguard, addresses one of the most pressing realities shaping technology trends in 2026: trust. As AI adoption accelerates and global regulations tighten, enterprises must embed governance, security, and geopolitical awareness into their core technology strategies.

This category of Gartner technology trends includes technologies focused on proactive cybersecurity, AI governance platforms, digital provenance, and sovereign infrastructure strategies. These trends recognize that innovation without control creates unacceptable risk.

A Strategic Blueprint for the Next Decade

In total, Gartner identifies 10 strategic technology trends under 3 themes that will shape enterprise IT agendas from 2026 through the end of the decade. Collectively, these technology trends illustrate how digital value will be:

- Built on AI-native and high-performance foundations

- Orchestrated through intelligent, multi-system collaboration

- Protected through advanced governance, security, and geopolitical alignment

For decision-makers evaluating IT outsourcing partnerships, understanding these Gartner technology trends is critical. They do not merely highlight emerging tools; they define where enterprise spending, transformation priorities, and competitive differentiation will concentrate in the coming years.

Top 10 Technology Trends According to Gartner

AI-Native Development Platforms

AI-native development platforms integrate generative AI and intelligent automation directly into the software development lifecycle (SDLC). Rather than relying solely on human coding effort, these platforms assist in requirements analysis, code generation, testing, debugging, documentation, and even architectural suggestions.

They combine large language models, domain-specific training data, and contextual project knowledge to generate code that can be iteratively refined toward production quality. In many cases, AI acts as a real-time co-engineer, accelerating delivery cycles while reducing repetitive tasks across development teams.

Why It Matters:

The strategic shift lies in repositioning AI from a productivity tool to a structural component of engineering operations. Instead of using AI as an add-on, organizations are redesigning workflows around AI-assisted collaboration.

According to Gartner, by 2030, 80% of organizations are expected to restructure traditional engineering teams into smaller, more agile units supported by AI-native development capabilities.

Risks to Consider:

- Code reliability risk: AI-generated code may contain logical errors, hidden bugs, or inefficient architecture despite appearing syntactically correct.

- Security vulnerabilities: Models trained on insecure or outdated repositories can propagate unsafe coding patterns.

- Intellectual property exposure: Generated outputs may unintentionally replicate copyrighted or open-source code without proper licensing compliance.

- Governance gaps: Lack of audit trails and oversight over AI-generated contributions can create compliance and accountability challenges.

- Over-dependence on automation: Excessive reliance on AI tools may weaken core engineering expertise and reduce critical thinking within teams.

AI Supercomputing Platforms

AI supercomputing platforms are high-performance computing environments purpose-built for large-scale AI workloads. They combine GPUs, AI accelerators, high-speed networking, and distributed storage to support intensive tasks such as training large language models, running complex simulations, and processing real-time analytics.

Unlike traditional enterprise infrastructure, these platforms are designed for massive parallel processing and often operate in hybrid models across cloud and on-premise environments.

Why It Matters:

AI is rapidly becoming infrastructure intensive. Training advanced AI models can require thousands of GPUs running continuously, pushing enterprises beyond conventional cloud setups. Gartner projects that by 2028, over 40% of leading organizations will adopt hybrid computing architectures to meet AI performance demands, a sharp increase from current levels.

This reflects a structural shift: AI capability is now directly tied to compute power and architectural design. Organizations without scalable, high-performance infrastructure will face slower innovation cycles and greater operational inefficiency.

Risks to Consider:

- High infrastructure cost and ongoing operational expenses

- Vendor lock-in within specific cloud or hardware ecosystems

- Energy consumption and sustainability pressure

- Specialized talent shortages in distributed AI architecture

- Data sovereignty and regulatory complexity in hybrid deployments

Confidential Computing

Confidential computing is a security paradigm that protects data while it is being processed, not only when stored (data at rest) or transmitted (data in transit). It leverages hardware-based Trusted Execution Environments (TEEs) to isolate sensitive workloads in encrypted memory regions, preventing unauthorized access, even from system administrators, cloud providers, or compromised operating systems.

In practical terms, this means enterprises can run sensitive analytics, AI models, or cross-border data processing workloads without exposing raw data to underlying infrastructure layers.

Why It Matters:

As AI adoption accelerates and enterprises move mission-critical workloads to hybrid or multi-cloud environments, data exposure risks increase significantly. Traditional encryption leaves a vulnerability gap at runtime, because data must be decrypted in memory before it can be processed. Confidential computing addresses this gap directly.

This trend is gaining momentum as regulatory frameworks such as GDPR, financial compliance standards, and data localization laws tighten globally. Gartner forecasts that by the end of the decade, most sensitive workloads operating in untrusted environments will require confidential computing protections. In other words, trust is becoming a competitive differentiator, especially in industries like banking, healthcare, insurance, and government services.

Risks to Consider:

- Implementation complexity due to hardware and platform dependencies

- Limited ecosystem maturity across certain cloud providers

- Performance overhead in some high-intensity workloads

- Misconfiguration risks if security policies are not properly enforced

Multiagent Systems (MAS)

Multiagent Systems (MAS) refer to architectures in which multiple AI agents operate autonomously or semi-autonomously and collaborate to accomplish complex tasks. Instead of relying on a single monolithic model, MAS distributes responsibilities across specialized agents that can communicate, negotiate, and coordinate actions in real time.

Each agent is typically designed with a defined objective, access to specific data sources, and the ability to trigger actions. When orchestrated properly, these agents function as a coordinated digital workforce capable of handling multi-step workflows with minimal human intervention.
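To make the orchestration idea concrete, here is a deliberately simplified Python sketch. The `Agent` and `Orchestrator` classes, the invoice workflow, and the 10,000 approval limit are all hypothetical; real multiagent frameworks add message passing, negotiation, memory, and LLM-backed reasoning. This version simply runs agents in a fixed sequence over a shared context and keeps an audit log of which agent changed what.

```python
from dataclasses import dataclass, field
from typing import Callable


# Hypothetical agent: a name, a declared objective, and a handler that
# reads the shared context and returns the fields it wants to update.
@dataclass
class Agent:
    name: str
    objective: str
    handle: Callable[[dict], dict]


@dataclass
class Orchestrator:
    agents: list
    audit_log: list = field(default_factory=list)

    def run(self, context: dict) -> dict:
        # Pass the shared context through each agent in order and
        # record which keys each agent updated, for traceability.
        for agent in self.agents:
            updates = agent.handle(context)
            context = {**context, **updates}
            self.audit_log.append(f"{agent.name}: {sorted(updates)}")
        return context


def extract(ctx):
    # Hypothetical extractor: parse an amount from "amount=<number>".
    return {"amount": float(ctx["raw"].split("=")[1])}


def validate(ctx):
    # Hypothetical policy limit for automatic approval.
    return {"valid": 0 < ctx["amount"] <= 10_000}


def approve(ctx):
    # Escalate to a human whenever validation fails.
    return {"status": "approved" if ctx["valid"] else "needs_human_review"}


pipeline = Orchestrator([
    Agent("extractor", "parse invoice", extract),
    Agent("validator", "check policy limits", validate),
    Agent("approver", "decide or escalate", approve),
])
result = pipeline.run({"raw": "amount=2500"})
print(result["status"])    # approved
print(pipeline.audit_log)
```

A fixed sequence is the easiest pattern to audit; systems where agents negotiate or run in parallel gain flexibility but need correspondingly stronger logging and guardrails.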

Why It Matters:

Large-scale AI initiatives increasingly require coordinated decision-making rather than isolated automation. A single monolithic model is rarely sufficient to manage layered business logic, cross-functional workflows, and real-time adaptation. Multiagent Systems overcome this limitation by orchestrating specialized agents that collaborate, negotiate priorities, and adjust behavior dynamically.

A distributed intelligence model strengthens system resilience and contextual responsiveness. Decomposing tasks into modular agents enables organizations to redesign complex processes with greater flexibility and tighter control. Rather than merely accelerating execution, Multiagent Systems introduce granular oversight, continuous optimization, and adaptive coordination across interconnected operations.

Risks to Consider:

- Coordination complexity between agents leading to unpredictable outcomes

- Governance challenges in tracking decisions made by multiple autonomous entities

- Security vulnerabilities if inter-agent communication is not properly secured

- Escalating operational costs if orchestration is poorly optimized

Domain-Specific Language Models (DSLMs)

Domain-Specific Language Models (DSLMs) are language models trained or fine-tuned on specialized industry data such as finance, healthcare, legal, manufacturing, or energy. Unlike general-purpose large language models, DSLMs concentrate on domain terminology, regulatory requirements, structured knowledge, and sector-specific reasoning patterns.

They are typically developed by adapting foundation models with proprietary enterprise datasets, internal documentation, and curated industry knowledge bases to improve contextual precision.

Why It Matters:

As AI adoption expands into regulated and mission-critical workflows, output accuracy becomes a strategic requirement rather than a performance metric. Inaccurate or non-compliant responses can create legal exposure, operational disruption, or financial loss.

DSLMs improve reliability in specialized use cases by narrowing the model’s knowledge scope and strengthening alignment with domain constraints. This enables organizations to automate high-value professional tasks, enhance decision support systems, and integrate AI more confidently into core business operations.

Risks to Consider:

- High cost of acquiring and maintaining domain-grade training data

- Risk of bias or overfitting due to narrow datasets

- Limited transferability across industries

- Continuous governance and compliance validation required

Physical AI

Physical AI describes the deployment of artificial intelligence into embodied systems such as robotics, autonomous vehicles, industrial machinery, medical devices, and smart infrastructure. Rather than operating solely in digital environments, these systems combine computer vision, sensor fusion, edge computing, control algorithms, and machine learning to perceive surroundings and execute actions in real time.

The concept extends beyond traditional automation. Physical AI systems continuously interpret environmental signals, adapt behavior based on feedback loops, and optimize performance under dynamic conditions.

Why It Matters:

Operational competitiveness increasingly depends on intelligence embedded in physical systems rather than software alone. Manufacturing, logistics, and healthcare environments require machines that can perceive conditions, adjust parameters, and act autonomously in real time, reducing latency and improving efficiency.

Investment momentum reflects this shift. The International Federation of Robotics reports that global industrial robot installations exceeded 553,000 units in 2022, signaling sustained demand for AI-driven automation amid labor shortages and supply chain pressures.

Risks to Consider:

- High capital expenditure for robotics and edge infrastructure

- Safety and liability concerns in autonomous physical environments

- Integration complexity with legacy operational systems

- Cybersecurity exposure in connected industrial devices

- Model failure in unpredictable real-world conditions

Preemptive Cybersecurity

Preemptive Cybersecurity focuses on anticipating and neutralizing threats before they materialize into active incidents. Instead of reacting to breaches after detection, this approach leverages predictive analytics, AI-driven threat modeling, attack surface management, and continuous risk assessment to identify vulnerabilities and adversarial behaviors in advance.

Capabilities often include proactive threat intelligence integration, real-time attack path simulation, automated vulnerability validation, and behavioral anomaly detection across hybrid cloud and on-premise environments.
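Attack path simulation is commonly modeled as a graph problem: assets are nodes, and an edge means an attacker who controls one asset could plausibly reach the next. The minimal Python sketch below uses an entirely hypothetical asset graph and enumerates every path from the internet to a database with a breadth-first search. Commercial tools build these graphs from vulnerability scans, identity data, and exploitability scores rather than a hand-written dictionary.

```python
from collections import deque

# Hypothetical asset graph: an edge A -> B means an attacker who controls A
# can plausibly reach B (for example via an exposed service or reused credential).
ATTACK_GRAPH = {
    "internet": ["web_server", "vpn_gateway"],
    "web_server": ["app_server"],
    "vpn_gateway": ["jump_host"],
    "jump_host": ["app_server", "db_server"],
    "app_server": ["db_server"],
    "db_server": [],
}


def attack_paths(graph, source, target):
    """Enumerate every cycle-free path from source to target, shortest first."""
    paths = []
    queue = deque([[source]])
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            paths.append(path)
            continue
        for nxt in graph.get(node, []):
            if nxt not in path:  # skip nodes already on this path
                queue.append(path + [nxt])
    return paths


paths = attack_paths(ATTACK_GRAPH, "internet", "db_server")
for p in paths:
    print(" -> ".join(p))
```

Because the search is breadth-first, the shortest path is returned first, and assets that sit on several paths, such as `app_server` and `jump_host` here, are natural candidates for remediation before an attacker ever uses them.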

Why It Matters:

Cyber risk exposure expands as enterprises adopt cloud-native architectures, AI systems, APIs, and distributed infrastructures. Traditional perimeter-based defenses and reactive incident response models struggle to keep pace with increasingly sophisticated attack techniques.

Financial impact underscores the urgency. IBM’s Cost of a Data Breach Report 2024 puts the global average cost of a data breach at USD 4.88 million, highlighting the escalating consequences of security failures. Proactively identifying exploitable weaknesses and potential attack paths reduces dwell time, limits lateral movement, and strengthens organizational resilience.

Risks to Consider:

- High dependency on accurate threat intelligence data

- False positives leading to unnecessary operational disruption

- Integration complexity across multi-cloud and legacy systems

- Continuous monitoring costs and infrastructure overhead

- Overreliance on automation without sufficient human oversight

Digital Provenance

Digital Provenance refers to technologies and frameworks that verify the origin, authenticity, and integrity of digital content, data, and AI-generated outputs. As synthetic media, generative AI, and automated content creation scale rapidly, establishing traceability becomes essential to distinguish verified information from manipulated or fabricated material.

Digital provenance mechanisms may include cryptographic watermarking, blockchain-based verification, metadata authentication standards (such as C2PA), and secure content credentialing systems embedded at the point of creation.
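The core mechanism behind content credentials is binding a cryptographic hash of the content to signed metadata about its origin. The Python sketch below illustrates that idea with the standard library only. It is a simplification under stated assumptions: the key, manifest fields, and helper names are hypothetical, and it uses a shared-secret HMAC where standards such as C2PA rely on certificate-based public-key signatures embedded in the asset.

```python
import hashlib
import hmac
import json

# Hypothetical shared signing key; real provenance systems use
# certificate-based public-key signatures instead of a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def make_credential(content: bytes, creator: str, tool: str) -> dict:
    # The manifest records who made the content, with what, and its hash.
    manifest = {
        "creator": creator,
        "tool": tool,
        "sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify(content: bytes, credential: dict) -> bool:
    payload = json.dumps(credential["manifest"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, credential["signature"]):
        return False  # manifest was altered or signed with a different key
    # Signature is valid; now check the content itself was not modified.
    return credential["manifest"]["sha256"] == hashlib.sha256(content).hexdigest()


image = b"example image bytes"
cred = make_credential(image, creator="Marketing Team", tool="ExampleGenAI v1")
print(verify(image, cred))         # True
print(verify(image + b"x", cred))  # False: content changed after signing
```

Verification fails if either the content or its metadata is altered, which is what lets downstream consumers distinguish credentialed material from edited or fabricated copies.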

Why It Matters:

The rapid expansion of generative AI has intensified concerns around misinformation, deepfakes, intellectual property disputes, and reputational risk. Enterprises increasingly rely on AI-generated content for marketing, customer engagement, software development, and decision support. Without verification frameworks, trust in digital outputs can erode quickly.

Regulatory momentum is also accelerating. The European Union’s AI Act and broader digital governance initiatives emphasize transparency and traceability in AI systems. Organizations lacking content authentication mechanisms may face compliance exposure, brand damage, or legal disputes.

Risks to Consider:

- Implementation complexity across fragmented digital ecosystems

- Interoperability challenges between verification standards

- Additional infrastructure costs for cryptographic and metadata systems

- Limited adoption of universal authentication frameworks

- Potential performance trade-offs when embedding verification layers

AI Security Platforms

AI Security Platforms are integrated security frameworks designed specifically to protect AI systems, models, and data pipelines throughout their lifecycle. Unlike traditional cybersecurity tools that focus on network or endpoint protection, these platforms address risks unique to AI environments, including model theft, data poisoning, adversarial attacks, prompt injection, and model drift.

Core capabilities often include model monitoring, adversarial testing, dataset validation, access governance for training pipelines, explainability tools, and runtime threat detection across AI applications deployed in cloud or edge environments.
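Model drift monitoring, one of the capabilities listed above, often starts by comparing the distribution of live model inputs or scores against a training-time baseline. One widely used statistic for this is the Population Stability Index (PSI). The Python sketch below is a minimal version with equal-width bins; it is not any particular platform's implementation, and the sample data is synthetic.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]   # synthetic training-time scores
shifted = [v + 0.3 for v in baseline]      # synthetic drifted live scores
print(round(psi(baseline, baseline), 3))   # 0.0
print(psi(baseline, shifted) > 0.25)       # True
```

A common rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as worth investigating, and above 0.25 as significant drift, though appropriate thresholds depend on the model and data.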

Why It Matters:

As organizations embed AI into mission-critical operations, AI systems themselves become high-value targets. Attack vectors increasingly focus on manipulating training data, exploiting model vulnerabilities, or extracting proprietary model weights. Security strategies designed for conventional IT systems are insufficient to manage these emerging risks.

Industry research highlights growing exposure. Gartner has projected that by 2026, organizations failing to secure AI systems adequately may face significant increases in model-related security incidents. Meanwhile, adversarial AI research demonstrates how minimal input manipulation can cause model misclassification in areas such as fraud detection, facial recognition, or autonomous systems.

Risks to Consider:

- Rapidly evolving AI-specific attack techniques

- Lack of standardized security frameworks for AI systems

- Limited in-house expertise in adversarial testing

- High monitoring overhead across distributed AI pipelines

- Regulatory uncertainty regarding AI liability and accountability

Geopatriation

Geopatriation refers to the strategic relocation or alignment of digital assets, data infrastructure, cloud workloads, and technology supply chains according to geopolitical, regulatory, and national security considerations. Instead of optimizing purely for cost efficiency or performance, organizations increasingly evaluate where data resides, where AI models are trained, and which jurisdictions govern their digital operations.

This trend encompasses sovereign cloud initiatives, data localization policies, regional AI model hosting, supply chain diversification, and cross-border compliance management.
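Many organizations put data localization into practice as policy-as-code: each workload is tagged with a data class, and deployment plans are checked against the jurisdictions allowed for that class. The Python sketch below shows the idea. The data classes, region names, and jurisdiction mapping are hypothetical, and real residency rules (for example, GDPR cross-border transfer mechanisms) are far more nuanced than a simple allow-list.

```python
# Hypothetical residency policy: which jurisdictions may host each data class.
RESIDENCY_POLICY = {
    "eu_personal_data": {"EU"},
    "financial_records": {"EU", "US"},
    "public_marketing": {"EU", "US", "APAC"},
}

# Hypothetical mapping of cloud regions to jurisdictions.
REGION_JURISDICTION = {
    "eu-west": "EU",
    "us-east": "US",
    "ap-southeast": "APAC",
}


def residency_violations(deployments):
    """Return (workload, region) pairs hosted outside their allowed jurisdictions."""
    violations = []
    for workload, data_class, region in deployments:
        # Unknown data classes and unmapped regions are denied by default.
        allowed = RESIDENCY_POLICY.get(data_class, set())
        if REGION_JURISDICTION.get(region) not in allowed:
            violations.append((workload, region))
    return violations


plan = [
    ("crm-db", "eu_personal_data", "eu-west"),
    ("crm-analytics", "eu_personal_data", "us-east"),
    ("ledger", "financial_records", "us-east"),
    ("website-cdn", "public_marketing", "ap-southeast"),
]
print(residency_violations(plan))  # [('crm-analytics', 'us-east')]
```

Denying by default keeps the check conservative, which suits compliance use cases where a false alarm is cheaper than an unnoticed violation.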

Why It Matters:

Regulatory fragmentation, data sovereignty mandates, and shifting geopolitical alliances are reshaping global digital operations. Infrastructure location, AI model hosting, and cross-border data flows now carry legal and strategic implications beyond technical performance.

Organizations that fail to align digital architecture with geopolitical realities face compliance exposure, operational disruption, and increased systemic risk. Geopatriation reframes infrastructure strategy as a core component of enterprise risk management and long-term competitiveness.

Risks to Consider:

- Increased operational costs due to regional infrastructure duplication

- Complexity in managing multi-jurisdiction compliance requirements

- Vendor fragmentation across sovereign cloud providers

- Reduced economies of scale in globally unified architectures

- Strategic uncertainty caused by shifting geopolitical policies

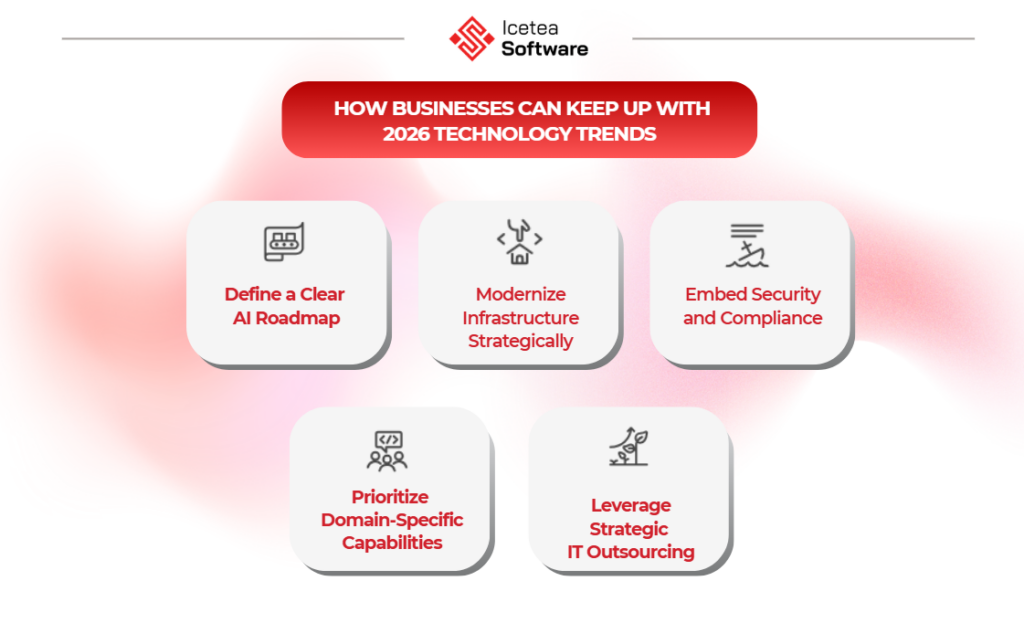

How Businesses Can Keep Up with 2026 Technology Trends

Awareness of emerging trends is not enough. Organizations need a structured approach that balances innovation, risk management, and cost control.

- Define a clear AI roadmap: Move beyond pilot projects. Align AI initiatives with core business objectives, measurable ROI, and long-term governance frameworks.

- Modernize infrastructure strategically: Ensure scalable cloud architecture, strong data governance, and secure AI-ready environments before scaling advanced systems.

- Embed security and compliance by design: Integrate DevSecOps, AI model monitoring, and proactive risk management early in the development lifecycle.

- Prioritize domain-specific capabilities: Invest in vertical AI solutions and proprietary datasets to increase accuracy and competitive differentiation.

- Leverage strategic IT outsourcing: Partner with experienced providers, such as engineering teams in Vietnam, to accelerate delivery, control costs, and access specialized AI talent.

Read more: IT Outsourcing in Vietnam: A Practical Look For Enterprises

Conclusion

Are you preparing for another year of technology upgrades, or for a structural reset in how your business operates? The forces shaping 2026 are not isolated innovations; they are redefining competitive logic across AI, security, infrastructure, and governance.

When intelligence becomes embedded into every workflow and trust becomes a strategic asset, hesitation carries a higher cost than experimentation. The defining challenge now is simple: will your organization adapt at the pace of transformation, or be shaped by it?

Contact Icetea Software to see how our expertise can help you answer that question.